Documentation

Build efficient edge AI applications with Embedl Hub.

Optimize and deploy your model on any edge device with the Embedl Hub Python library:

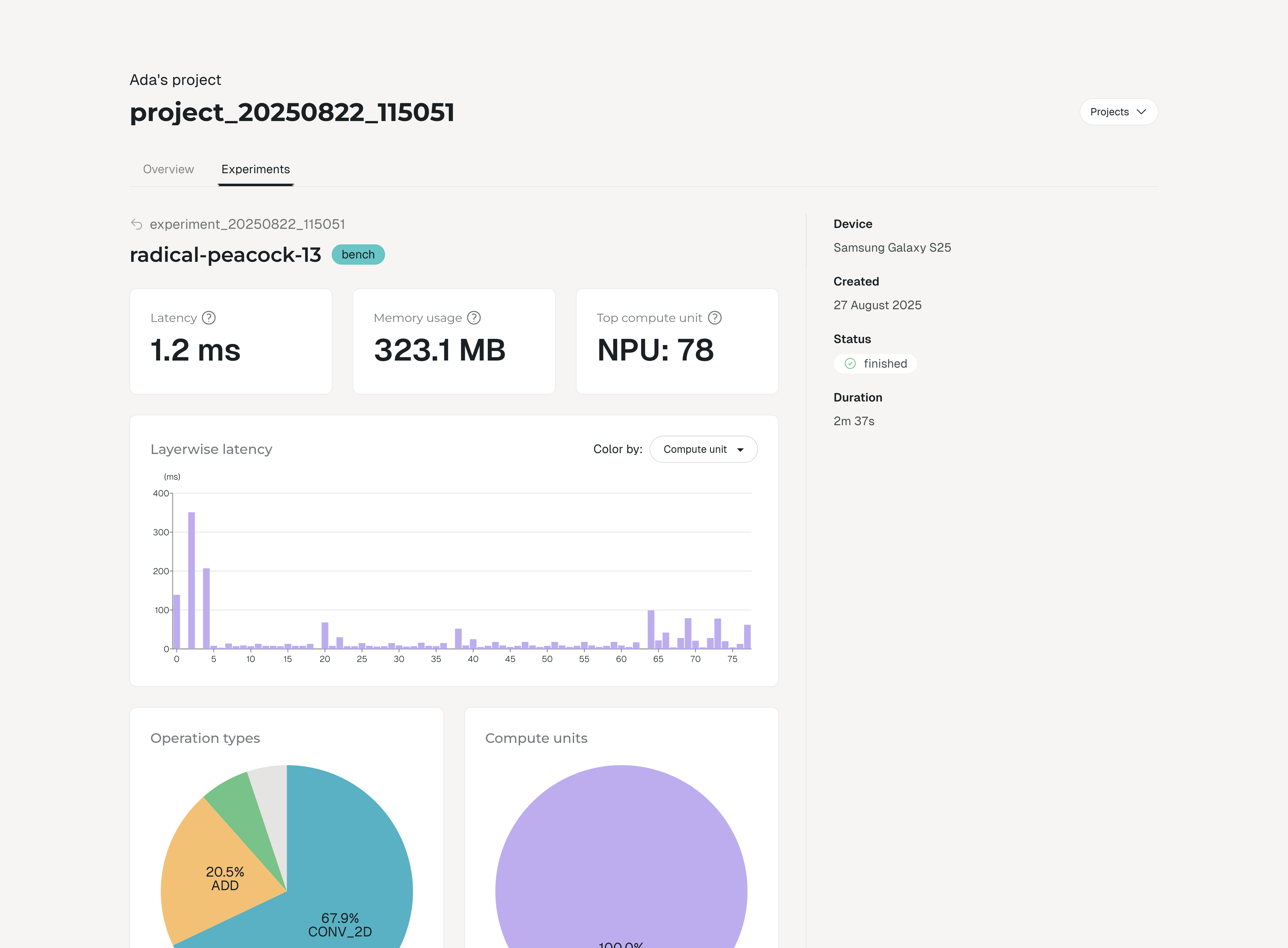

- Quantize your model for lower latency and memory usage.

- Compile your model for execution on the CPU, GPU, NPU, or other AI accelerator of your target device.

- Benchmark your model's latency and memory usage on real edge devices in the cloud.
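To make the memory benefit of quantization concrete, here is a minimal, library-independent sketch of symmetric int8 quantization in plain Python. It does not use or represent the Embedl Hub API. The function names `quantize_int8` and `dequantize` and the sample weights are hypothetical and chosen purely for illustration.

```python
import struct


def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization (illustrative only).

    Returns the quantized integers and the scale needed to map them back.
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range used here.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map quantized integers back to approximate float values."""
    return [v * scale for v in q]


# Hypothetical weights, not taken from any real model.
weights = [0.5, -1.27, 0.0, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Storage cost: 4 bytes per float32 value versus 1 byte per int8 value.
fp32_bytes = len(struct.pack(f"{len(weights)}f", *weights))
int8_bytes = len(struct.pack(f"{len(q)}b", *q))

print(q, fp32_bytes, int8_bytes)
```

Each weight is stored in one byte instead of four, at the cost of a rounding error of at most half a quantization step per value. Production quantization toolchains typically add calibration data and per-channel scales, which this sketch omits.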

Embedl Hub logs your metrics, parameters, and benchmarks, allowing you to inspect and compare your results on the web and reproduce them later.

Ready to build?

Follow the setup guide to get started with Embedl Hub. Then work through the quickstart guide to go from an application idea to a model benchmarked on remote hardware.