Launch Celebration: Win a Jetson Orin Nano Super or Raspberry Pi 5!

Published

By Elina Norling

We’re excited to introduce the latest major update to Embedl Hub: our own remote device cloud!

To mark the occasion, we’re launching a community competition and giveaway. The participant who provides the most valuable feedback after using our platform to run and benchmark AI models on any device in our device cloud will win an NVIDIA Jetson Orin Nano Super. We’re also giving a Raspberry Pi 5 to everyone who places 2nd to 5th.

How to participate

- Join Embedl Hub and get started.

- Run a benchmark on any device in our device cloud, optionally compiling and quantizing the model beforehand. All of this is done with the Embedl Hub Python library.

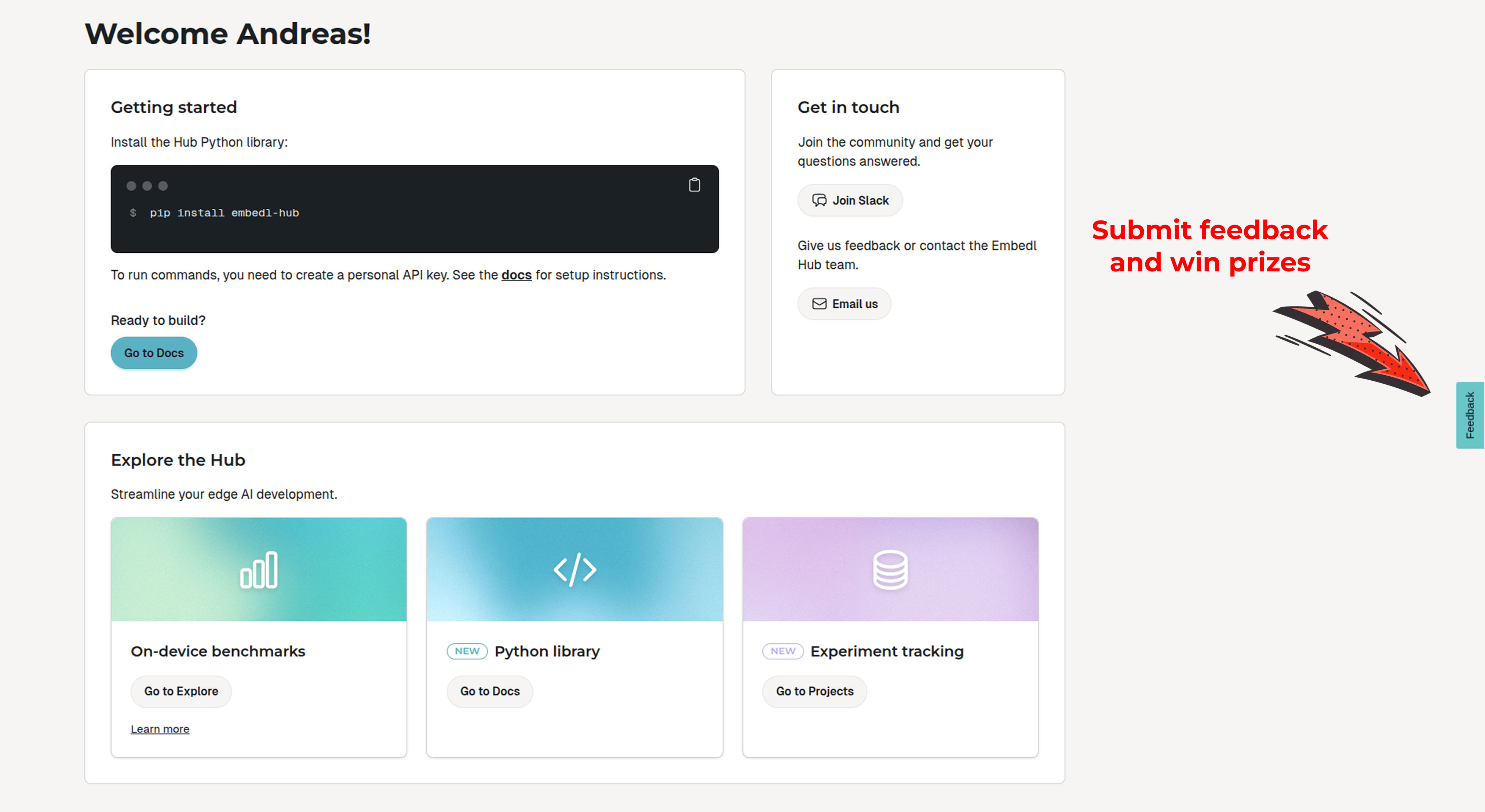

- Give us your feedback about the platform in the feedback form available after login (see image below). Once you submit your feedback, you are entered into the competition.

How much feedback you give is up to you, but to win the Jetson Orin Nano Super, your feedback needs to be the most valuable to us for our platform development going forward. It could be a missing feature that would be useful, a friction point you discovered in the workflow after many runs, or something obvious we simply hadn’t thought of.

The winners will be announced on January 20. Stay tuned!

NVIDIA Jetson Orin Nano Super at a glance

NVIDIA’s latest compact edge-AI “super developer kit,” delivering up to 67 TOPS of AI performance in a small, power-efficient form factor. Ideal for running advanced on-device AI workloads.

Raspberry Pi 5 at a glance

The newest generation of the iconic Raspberry Pi, featuring a 2.4 GHz quad-core ARM Cortex-A76 CPU, faster I/O, and significantly improved performance for modern hobby, IoT, and edge-AI projects.

Join the competition!

Get started with the quickstart guide. If you’d like help getting models running, or if you have questions or suggestions, just reach out to the team in our Slack channel.

Why we’ve built this platform

On-device AI offers many advantages: low-latency interactions, offline functionality, and total privacy, to name a few. But running AI on an edge device is far harder than running it on a PC. One of the most common problems is that performance varies widely across devices and chipsets, each with its own hardware limitations, such as memory constraints and battery budgets. Not every device has enough AI processing power to handle heavy workloads with real-time requirements. This makes on-device performance inherently unpredictable: models that work perfectly on a few devices may be slow or broken on others.

For example, if you’re building a mobile app with on-device AI features, these issues often show up only after release, when the app runs on user devices you never tested. This leads to frustrated users, negative reviews, and developers removing the feature entirely, losing the benefits of on-device AI. Read more about why on-device AI is important and the challenges that follow in this blog post.

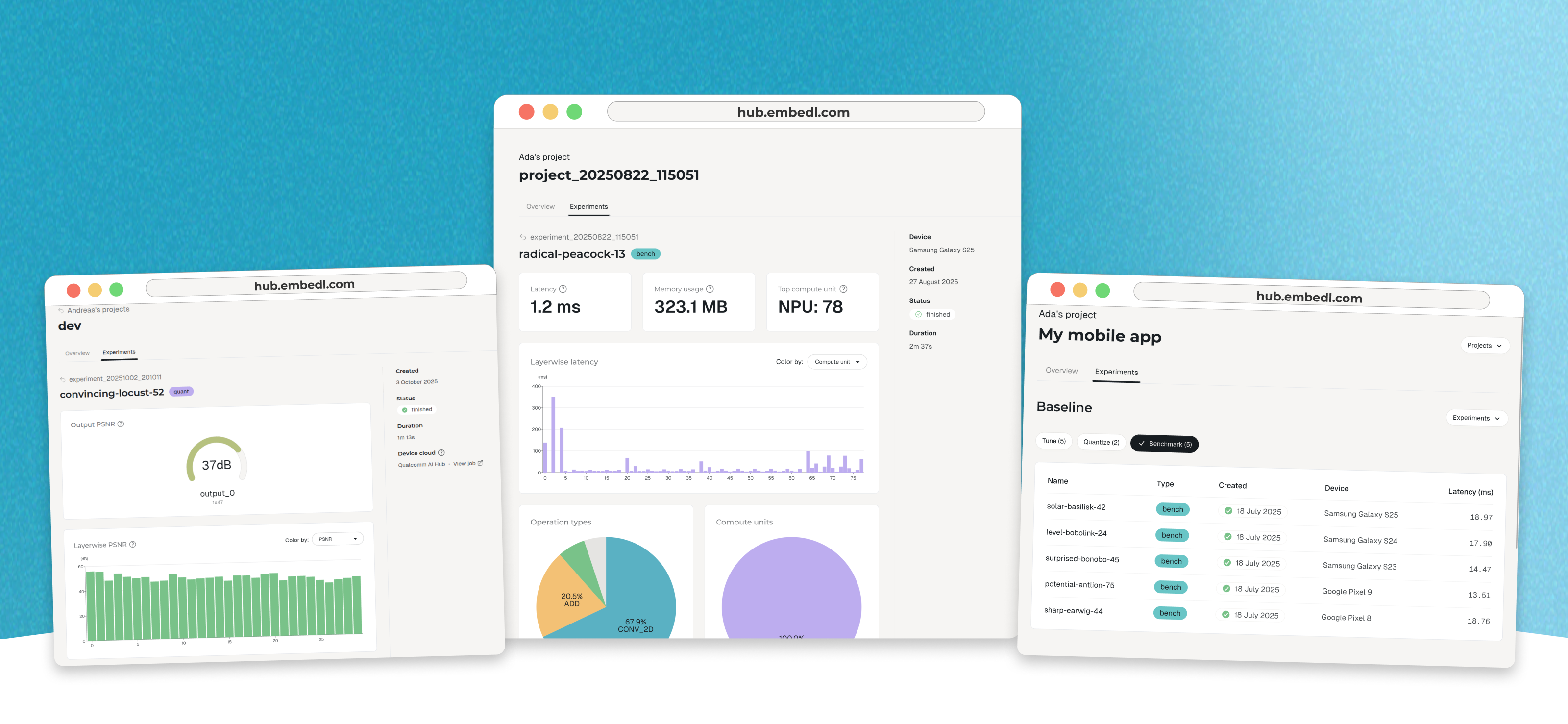

Because we’ve encountered these issues ourselves, we set out to build a tool that removes this uncertainty. With the platform, you can:

- Use layer-wise peak signal-to-noise ratio (PSNR) for better quantization

- View a history of all your submitted benchmarks

- Compare performance metrics (such as latency, memory usage, and PSNR) across different devices

- Identify which device delivers the best results for your model

- Download detailed logs and reports for further analysis
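
To give a feel for what layer-wise PSNR measures, here is a minimal, generic sketch in plain Python. It does not use the Embedl Hub library, and the exact PSNR definition the platform uses is not stated in this post. The sketch assumes the peak value is the largest magnitude in the floating-point reference output. The helper names (`psnr`, `layerwise_psnr`) and the layer outputs are made up for illustration.

```python
import math


def psnr(reference, candidate):
    """Peak signal-to-noise ratio in dB between two equal-length sequences."""
    if len(reference) != len(candidate) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error between the float output and the quantized output
    mse = sum((r - c) ** 2 for r, c in zip(reference, candidate)) / len(reference)
    if mse == 0.0:
        return math.inf  # identical outputs: no quantization noise
    # Assumption: the peak is the largest magnitude in the reference output
    peak = max(abs(r) for r in reference)
    if peak == 0.0:
        return -math.inf  # all-zero reference but non-zero error
    return 10.0 * math.log10(peak ** 2 / mse)


def layerwise_psnr(float_outputs, quantized_outputs):
    """Map each layer name to the PSNR of its quantized output."""
    return {
        name: psnr(float_outputs[name], quantized_outputs[name])
        for name in float_outputs
    }


# Hypothetical flattened layer outputs, invented for this example
float_outputs = {"conv1": [0.5, -1.0, 2.0, 0.25], "fc": [1.0, 0.0, -1.0, 0.5]}
quantized_outputs = {"conv1": [0.5, -1.0, 2.0, 0.25], "fc": [0.9, 0.1, -1.1, 0.4]}

scores = layerwise_psnr(float_outputs, quantized_outputs)
# The layer with the lowest PSNR lost the most precision to quantization
worst_layer = min(scores, key=scores.get)
print(worst_layer, scores)
```

A low PSNR for a single layer suggests that layer is sensitive to quantization, which makes it a natural candidate for keeping at higher precision.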

Try it out at hub.embedl.com and let us know what you think!