No fluff. Just edge AI tools that work.

Explore the full workflow.

Python Library

Optimize your model

Compile and quantize your model for efficient on-device inference.

Device Cloud

Test on real hardware

Run your model on real edge devices in the cloud.

Platform

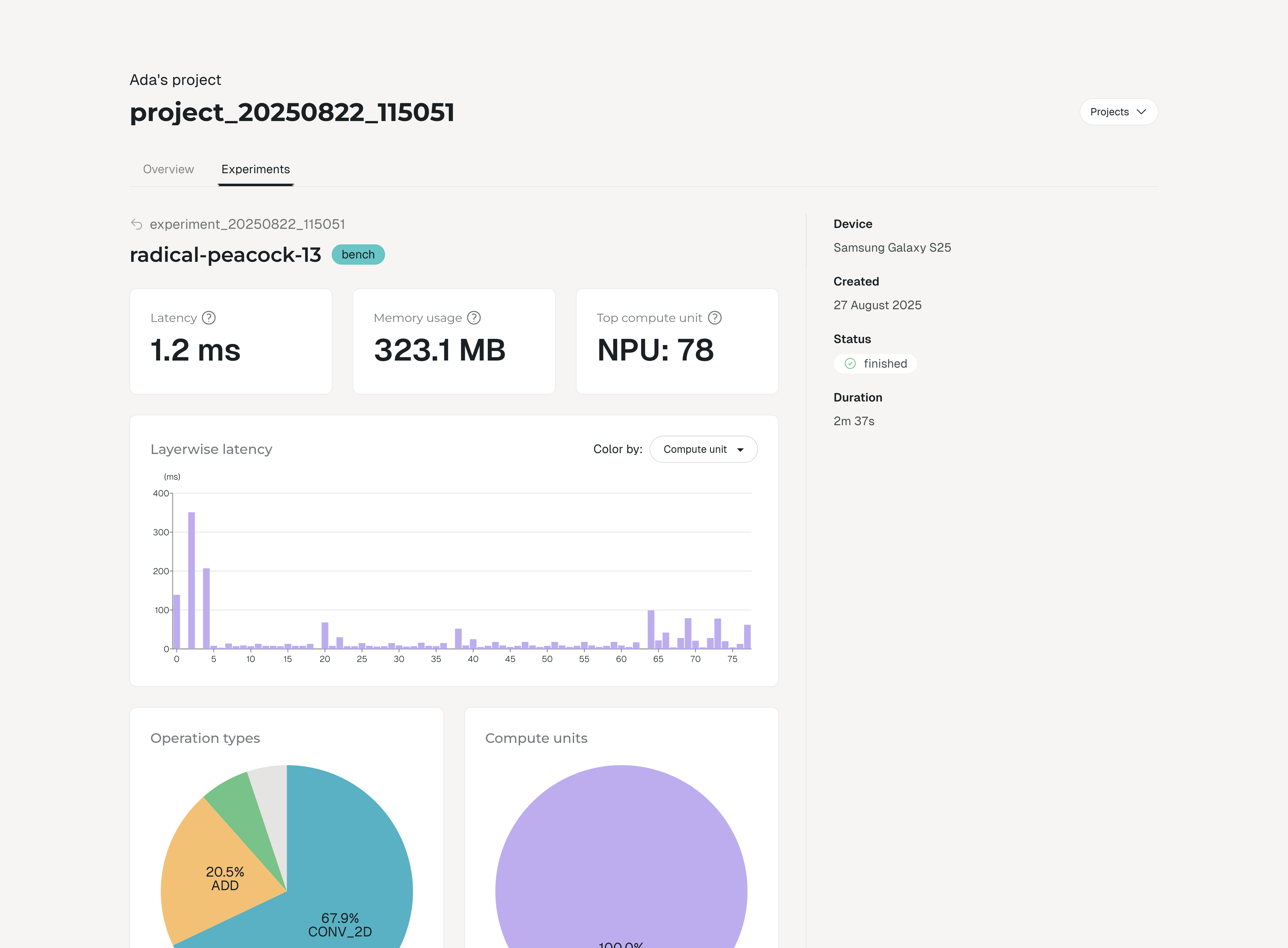

Analyze & compare

Visualize the performance of your model with our dashboards.

Optimize it. Compile it. Run it.

Deploy your model to any edge device with the Hub Python library.

Smaller, faster models.

Optimize your model for lower latency and memory usage.
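To make the memory claim concrete, here is a minimal sketch of symmetric int8 weight quantization using only the Python standard library. It is a generic illustration of the technique, not the Hub library's API: the helper names `quantize_int8` and `dequantize` and the sample weights are assumptions made up for this example.

```python
from array import array


def quantize_int8(weights):
    """Symmetric per-tensor quantization: each weight w is stored as q, with w ~= scale * q."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the signed 8-bit range.
    q = array("b", (max(-127, min(127, round(w / scale))) for w in weights))
    return q, scale


def dequantize(q, scale):
    """Map int8 values back to approximate floating-point weights."""
    return [scale * v for v in q]


# Hypothetical float32 weights, for illustration only.
weights = array("f", [0.5, -1.27, 0.03, 1.0])
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

fp32_bytes = weights.itemsize * len(weights)  # 4 bytes per weight
int8_bytes = q.itemsize * len(q)              # 1 byte per weight
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(f"{fp32_bytes} bytes -> {int8_bytes} bytes, max error {max_error:.5f}")
```

Storing weights as int8 instead of float32 cuts their memory footprint by 4x, at the cost of a rounding error of at most half a quantization step per weight.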

Target every chip.

Compile your model for execution on CPU, GPU, NPU or other AI accelerators on your target devices.

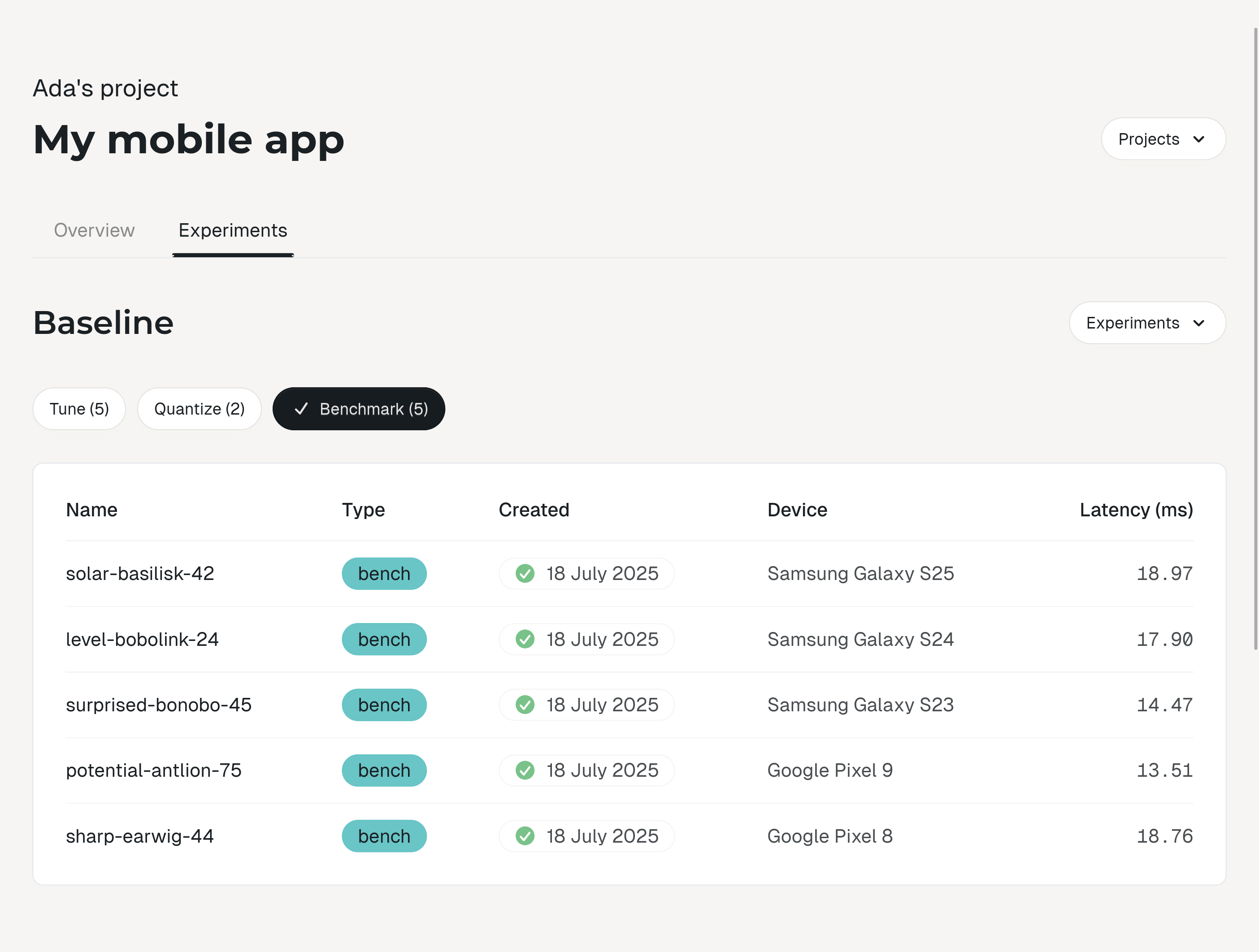

Run your own benchmarks.

Measure latency and memory usage of your model on a real edge device in the cloud.
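As a rough picture of what such a measurement involves, the sketch below times a stand-in workload on the local machine and records its peak Python heap usage. It assumes nothing about the Hub library or the device cloud: `benchmark` and `toy_model` are hypothetical names, and numbers from a development host will not match those from a real edge device.

```python
import statistics
import time
import tracemalloc


def benchmark(fn, *args, warmup=3, runs=20):
    """Time repeated calls to fn and record peak Python heap usage for one call."""
    # Warm-up calls keep one-time setup costs out of the timings.
    for _ in range(warmup):
        fn(*args)

    latencies_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        fn(*args)
        latencies_ms.append((time.perf_counter() - start) * 1e3)

    # Memory is traced in a separate call so tracing overhead does not skew latency.
    tracemalloc.start()
    fn(*args)
    _, peak_bytes = tracemalloc.get_traced_memory()
    tracemalloc.stop()

    return {
        "mean_ms": statistics.fmean(latencies_ms),
        "p90_ms": statistics.quantiles(latencies_ms, n=10)[-1],
        "peak_bytes": peak_bytes,
    }


def toy_model(n):
    """Stand-in workload; a real benchmark would run model inference here."""
    return sum([i * i for i in range(n)])


result = benchmark(toy_model, 10_000)
print(result)
```

Reporting a high percentile alongside the mean helps surface occasional slow runs that an average would hide.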

Test your model on remote devices.

Run benchmarks and identify issues early in development.

Snapdragon 8 Elite (SM8750)

Samsung Galaxy S25, Samsung Galaxy S25 Ultra, Snapdragon 8 Elite QRD


Tensor G4 (GS401)

Google Pixel 9, Google Pixel 9 Pro, Google Pixel 9 Pro XL


Exynos 1330 (S5E8535)

Samsung Galaxy A14 5G


Snapdragon 8 Gen 3 (SM8650)

Samsung Galaxy S24, Samsung Galaxy S24+, Samsung Galaxy S24 Ultra


Tensor G3 (GS301)

Google Pixel 8, Google Pixel 8 Pro


Exynos 1280 (S5E8825)

Samsung Galaxy A53 5G


Snapdragon 8 Gen 2 (SM8550)

Samsung Galaxy S23, Samsung Galaxy S23+, Samsung Galaxy S23 Ultra


Snapdragon 8 Gen 1 (SM8450)

Samsung Galaxy S22 Ultra 5G, Xiaomi 12 Pro, Samsung Galaxy Tab S8


Snapdragon 888 (SM8350)

Samsung Galaxy S21, Samsung Galaxy S21+, Samsung Galaxy S21 Ultra


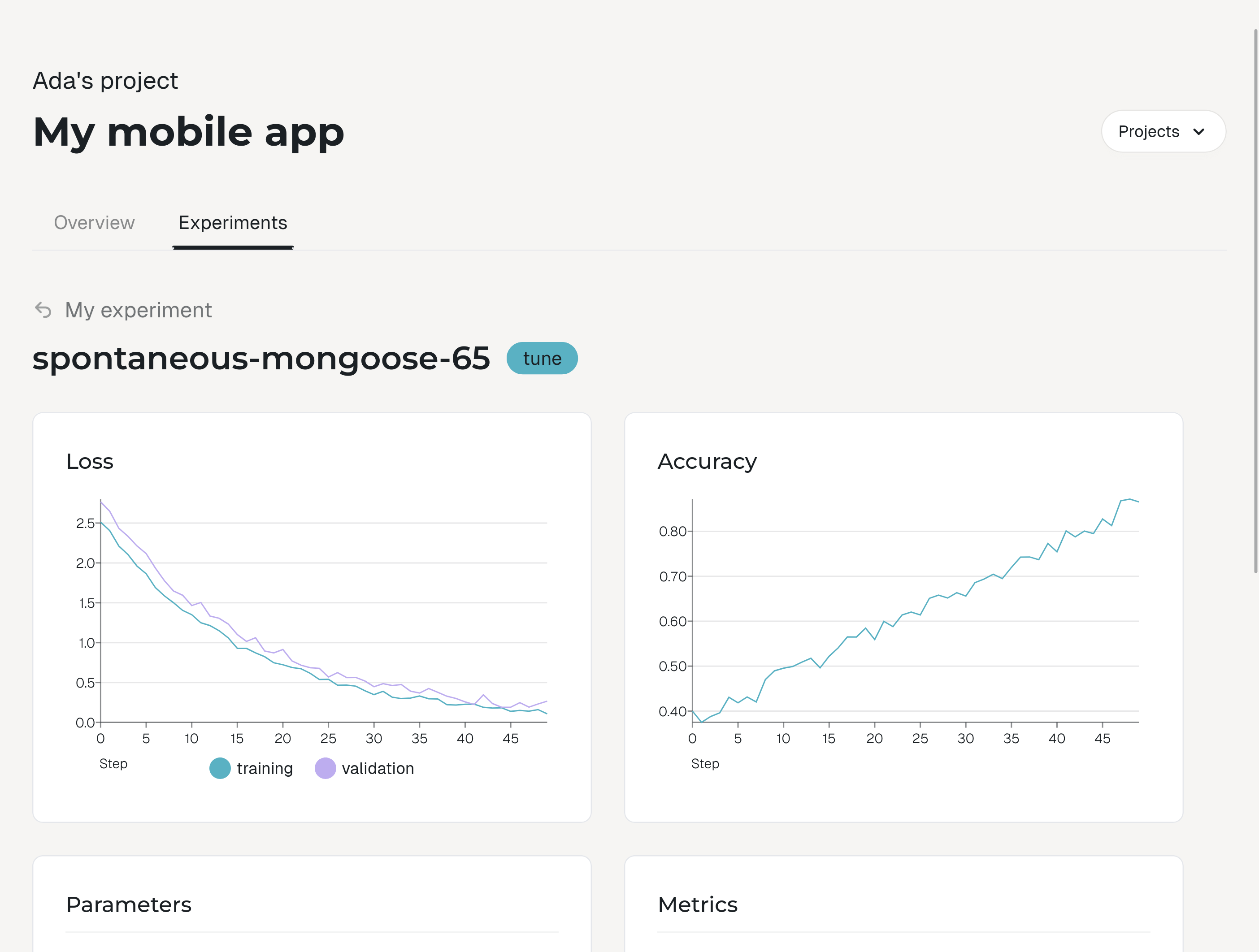

All your results in one place.

Analyze and visualize your experiments on the web.